Sep 14, 2023

About building tools for LLM agents with Flo Crivello, CEO of Lindy AI

Lindy is an AI assistant whose primary goal is to save its users time. It is described as “supremely reliable and attentive to detail.” We interviewed its creator, Flo Crivello, about his methodology for developing Lindy AI and the agent's internal tooling.

Overall approach towards building agents

Flo describes how the team is building Lindy:

“We think of our approach as two halves. An AI agent needs:

The correct collection of tools

To know how to utilize them."

The team is building a framework focused on the second point in particular; in Flo's view, integrations aren't the big risk here.

Right now, the Lindy AI team is especially focused on techniques that get the agent to self-improve, learning from its own experience how to use its tools better and better.

Main use cases and ideal users

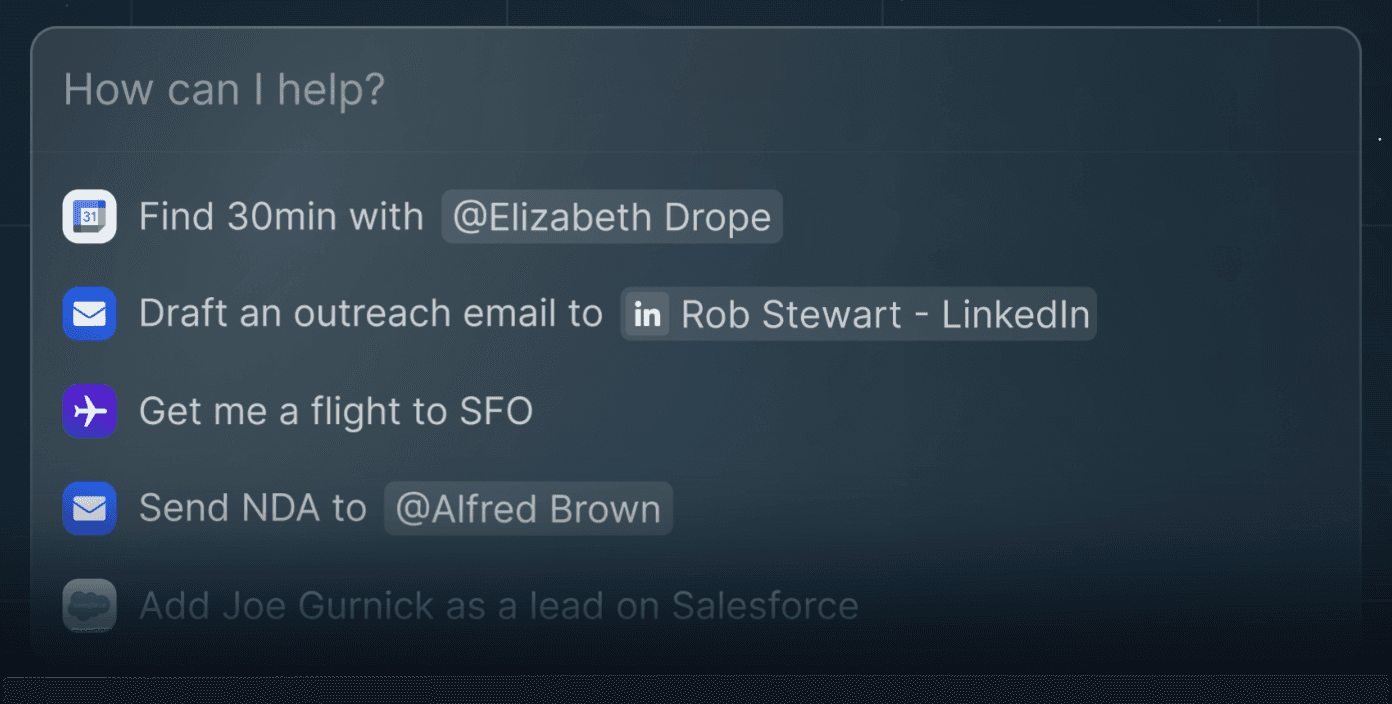

Lindy AI is a personal assistant for making a user's life more efficient. It can assist with all daily tasks, from managing user's schedule and composing emails to sending contracts, and more.

“Our ideal user is a senior manager in the technology space,” says Flo.

Examples of use cases. Source: Lindy AI landing page

Reliability

Lindy has made significant progress on reliability. When it is unsure about a task, or is about to take a high-stakes action, the agent asks the end user for confirmation.

"These confirmations become increasingly unnecessary as time goes by, both because we make Lindy smarter, and because she learns the user's preferences," explains Flo.
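To make the confirmation mechanism concrete, here is a minimal sketch of a policy that asks before uncertain or high-stakes actions and stops asking once the user has repeatedly approved a given kind of action. This is not Lindy's implementation. The class `ConfirmationPolicy`, its thresholds, and the "consecutive approvals" rule are hypothetical stand-ins for learning the user's preferences.

```python
from dataclasses import dataclass, field


@dataclass
class ConfirmationPolicy:
    """Hypothetical policy: ask before risky or uncertain actions, and stop
    asking once the user has approved an action type enough times in a row."""
    confidence_threshold: float = 0.8  # below this, the agent counts as "unsure"
    approvals_to_trust: int = 3        # consecutive approvals before skipping confirmation
    approvals: dict[str, int] = field(default_factory=dict)

    def needs_confirmation(self, action: str, confidence: float, high_stakes: bool) -> bool:
        # An action type the user keeps approving is treated as a learned preference.
        if self.approvals.get(action, 0) >= self.approvals_to_trust:
            return False
        return high_stakes or confidence < self.confidence_threshold

    def record_decision(self, action: str, approved: bool) -> None:
        # Approvals accumulate; a single rejection resets trust for that action type.
        self.approvals[action] = self.approvals.get(action, 0) + 1 if approved else 0


policy = ConfirmationPolicy()
print(policy.needs_confirmation("send_email", confidence=0.95, high_stakes=True))  # True
for _ in range(3):
    policy.record_decision("send_email", approved=True)
print(policy.needs_confirmation("send_email", confidence=0.95, high_stakes=True))  # False
```

Resetting the count on a rejection keeps the sketch conservative: one "no" from the user is enough to bring confirmations back for that action type.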

Building agent tools

We asked Flo how the team currently approaches agent debugging, monitoring, and tracing, what the main struggles in this area are, and how they plan to solve them.

"We build a wide range of internal tools, for example for tracing and monitoring," says Flo. "Debugging agents is, in principle, similar to regular debugging: you examine the logs you have, try to reproduce the issue, then try different solutions until the bug is fixed."

The Lindy AI team has developed all the agent tooling in-house.

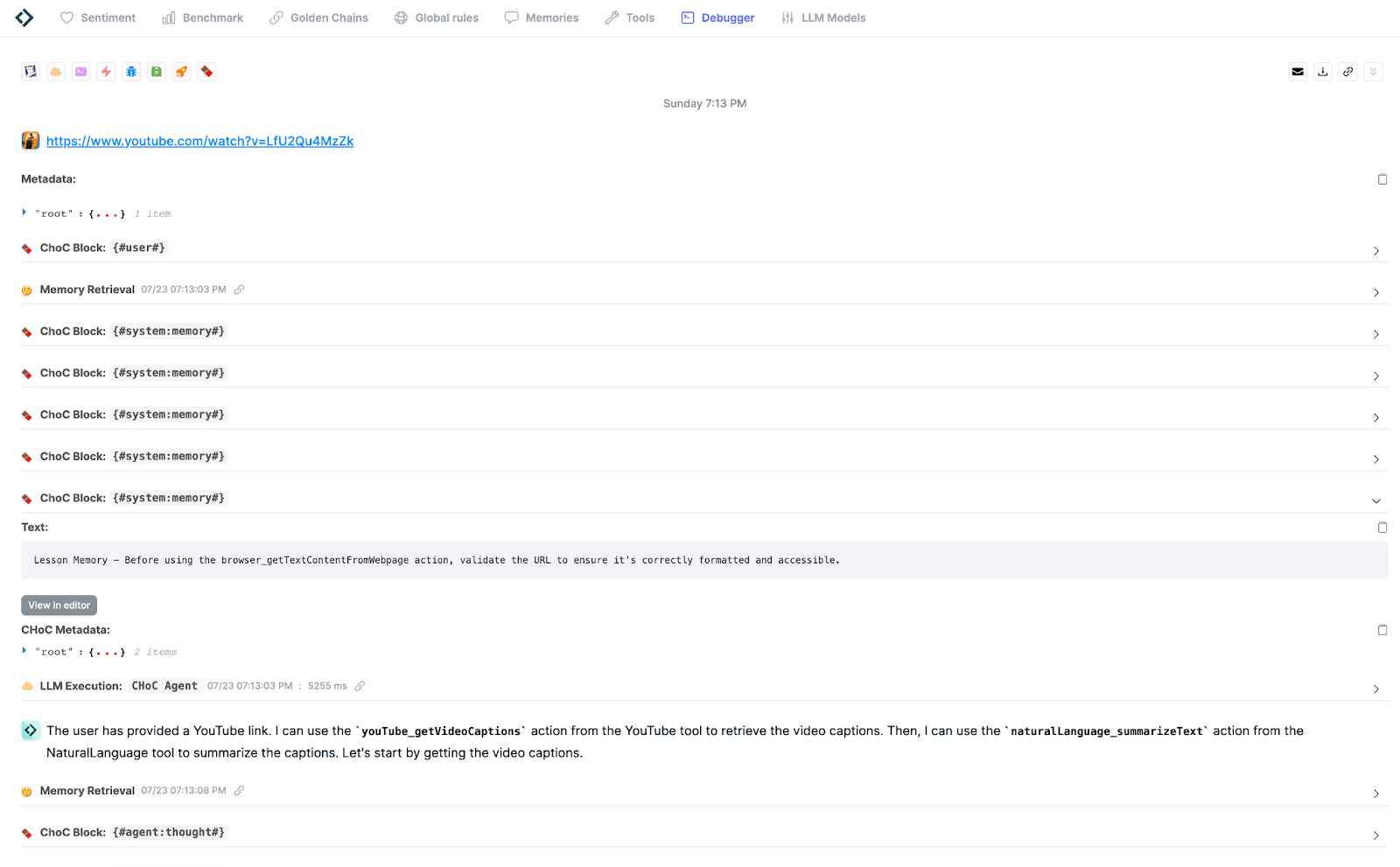

"Our most important tool is probably the tracer, which shows step by step what the agent did exactly to fulfill a user query," says Flo. "It is similar to Langsmith, but way better in our opinion."

Source: Lindy AI
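The core idea of a tracer can be sketched in a few lines: every step the agent takes is recorded in order, with its inputs, output, errors, duration, and nesting level, so the whole run can be replayed as a step-by-step list. This is a generic illustration, not how Lindy's tracer or LangSmith is built. The `Tracer` and `Step` classes and the step names in the usage are hypothetical.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Any, Iterator, Optional


@dataclass
class Step:
    """One recorded step: what was called, with what, and what came back."""
    name: str
    inputs: dict[str, Any]
    depth: int                     # nesting level, so sub-steps can be indented
    output: Any = None
    error: Optional[str] = None
    duration_ms: float = 0.0


class Tracer:
    """Records, in order, every step an agent takes while handling one query."""

    def __init__(self) -> None:
        self.steps: list[Step] = []
        self._depth = 0

    @contextmanager
    def step(self, name: str, **inputs: Any) -> Iterator[Step]:
        record = Step(name=name, inputs=inputs, depth=self._depth)
        self.steps.append(record)  # appended on entry, so parents precede their sub-steps
        self._depth += 1
        start = time.perf_counter()
        try:
            yield record           # the caller sets record.output inside the block
        except Exception as exc:
            record.error = repr(exc)
            raise                  # tracing must not swallow the agent's errors
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self._depth -= 1

    def render(self) -> str:
        lines = []
        for i, s in enumerate(self.steps, 1):
            result = f"ERROR {s.error}" if s.error else f"-> {s.output!r}"
            lines.append(f"{'  ' * s.depth}{i}. {s.name}({s.inputs}) {result} [{s.duration_ms:.1f} ms]")
        return "\n".join(lines)


# Usage: one query with a nested tool call (step names are made up for illustration).
tracer = Tracer()
with tracer.step("handle_query", query="Schedule lunch with Ana on Friday") as root:
    with tracer.step("check_calendar", day="Friday") as call:
        call.output = ["12:00", "13:00"]
    root.output = "Proposed lunch at 12:00 on Friday"
print(tracer.render())
```

Recording the error and then re-raising it means the trace shows exactly where a run failed, which fits the debugging loop Flo describes: read the trace, reproduce the issue, and try fixes.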

The Lindy AI team also has tools for:

Seeing the "lessons" that the agent is learning over time (the Memory in the screenshot above) and editing them

Reviewing the available tools and editing the agent's instructions on when and how to use each one

Editing global rules or action-level rules

Monitoring benchmarks
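One way to picture what these editing tools operate on is a single configuration object that holds per-tool instructions, action-level rules, global rules, and learned lessons, and that is rendered into the agent's prompt. The sketch below assumes that design. `AgentConfig`, `Tool`, and the prompt layout are hypothetical and do not describe Lindy's internals.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str
    instructions: str                               # when and how the agent should use this tool
    rules: list[str] = field(default_factory=list)  # action-level rules for this tool


@dataclass
class AgentConfig:
    tools: dict[str, Tool] = field(default_factory=dict)
    global_rules: list[str] = field(default_factory=list)
    lessons: list[str] = field(default_factory=list)  # "memory" learned from past runs

    def edit_instructions(self, tool_name: str, instructions: str) -> None:
        self.tools[tool_name].instructions = instructions

    def add_lesson(self, lesson: str) -> None:
        if lesson not in self.lessons:  # avoid storing the same lesson twice
            self.lessons.append(lesson)

    def remove_lesson(self, lesson: str) -> None:
        self.lessons.remove(lesson)

    def build_prompt(self) -> str:
        # Render everything a human can edit into the text the agent sees.
        parts = ["Global rules:"] + [f"- {r}" for r in self.global_rules]
        parts.append("Tools:")
        for tool in self.tools.values():
            parts.append(f"- {tool.name}: {tool.instructions}")
            parts += [f"  - rule: {r}" for r in tool.rules]
        parts.append("Lessons learned:")
        parts += [f"- {lesson}" for lesson in self.lessons]
        return "\n".join(parts)


config = AgentConfig(global_rules=["Never share the user's calendar with third parties."])
config.tools["send_email"] = Tool("send_email", "Use to reply to or start email threads.",
                                  rules=["Ask before emailing someone new."])
config.add_lesson("The user prefers meetings after 10:00.")
config.edit_instructions("send_email", "Use only for replies unless the user asks otherwise.")
print(config.build_prompt())
```

Keeping lessons, rules, and tool instructions as plain editable data, rather than hard-coding them in the prompt, is what would make reviewing and editing them through an internal tool straightforward.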

Other challenges

During the discussion, Flo mentioned two additional problems the team is currently working to resolve: cognitive architecture and fine-tuning.

"Right now, coming up with the right cognitive architecture is insanely challenging for us," says Flo, "but we wouldn’t outsource it, since we believe that’s our purpose."

"Fine-tuning our model is also very painful, especially because we require a big model (40B+ parameters) and a big context window (8k+ tokens)," adds Flo. "I foresee that once we have that model, deploying it for inference at scale will be another significant challenge."
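A back-of-the-envelope calculation shows why a 40B-parameter model is hard to work with. The sketch uses common rules of thumb, not numbers from Lindy. It assumes about 2 bytes per parameter for bf16/fp16 inference weights and about 16 bytes per parameter for full fine-tuning with Adam in mixed precision (fp16 weights and gradients, plus an fp32 master copy and two fp32 optimizer moments). It also assumes 80 GB accelerators. Activations and the KV cache, which grows with the 8k+ token context, are excluded, so real requirements are higher. Parameter-efficient fine-tuning methods would need considerably less.

```python
import math


def memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory in gigabytes (10**9 bytes) needed to hold n_params at bytes_per_param."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 40e9  # the "40B+" lower bound mentioned in the interview

# Rule-of-thumb bytes per parameter (assumptions, not Lindy's measured numbers):
INFERENCE_BF16 = 2       # bf16/fp16 weights only
FULL_FINETUNE_ADAM = 16  # fp16 weights + fp16 grads + fp32 master copy + two fp32 Adam moments

GPU_MEMORY_GB = 80       # assumed memory of one accelerator

for label, bpp in [("inference weights (bf16)", INFERENCE_BF16),
                   ("full fine-tuning states (Adam, mixed precision)", FULL_FINETUNE_ADAM)]:
    gb = memory_gb(N_PARAMS, bpp)
    gpus = math.ceil(gb / GPU_MEMORY_GB)
    print(f"{label}: {gb:.0f} GB, at least {gpus} x {GPU_MEMORY_GB} GB GPU(s) before activations")
```

Even the weights alone fill an entire 80 GB device, and the training state is roughly eight times larger. This is consistent with Flo's expectation that both fine-tuning and serving at scale will be difficult.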