Nov 7, 2023

OpenAI DevDay

This is a view by the E2B team, so the thoughts and comments are based on our experience with the AI space. E2B provides sandboxed cloud environments for AI-powered apps and agentic workflows.

Check out our sandbox runtime for LLMs.

We are open-source, so please check out our GitHub, and support us with a star. ✴️

The highly anticipated OpenAI DevDay is likely to be remembered as the biggest AI event in 2023.

For weeks, rumors had been circulating that the way we use ChatGPT was about to change completely and that the announcements would kill many AI startups.

After watching the DevDay Opening Keynote together with our community, we are discussing the major announcements.

The announcements

On stage, Sam Altman shared three major updates:

GPT-4 Turbo launch - with longer context, more control, better knowledge, new modalities, customization, and higher rate limits

GPTs - Customized versions of ChatGPT

Assistants API - an API, with its own playground, for building AI assistants (the developer-facing counterpart of GPTs)

Here are the three big announcements in detail:

1. GPT-4 Turbo

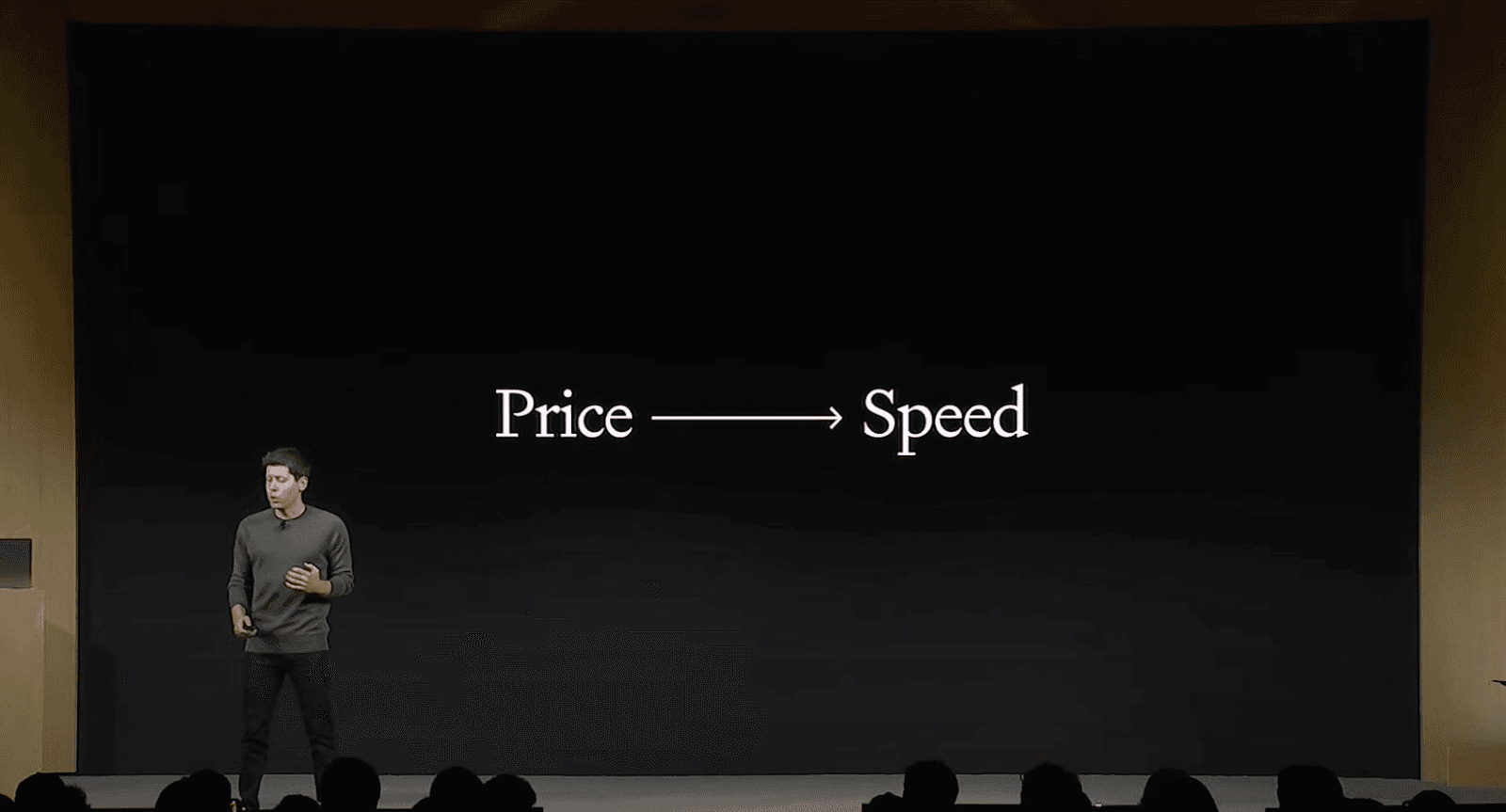

When we talked to medium-sized and enterprise companies this year, many of them had AI projects just waiting for a lower price and better latency to launch. The new GPT-4 Turbo model addresses this, among other things.

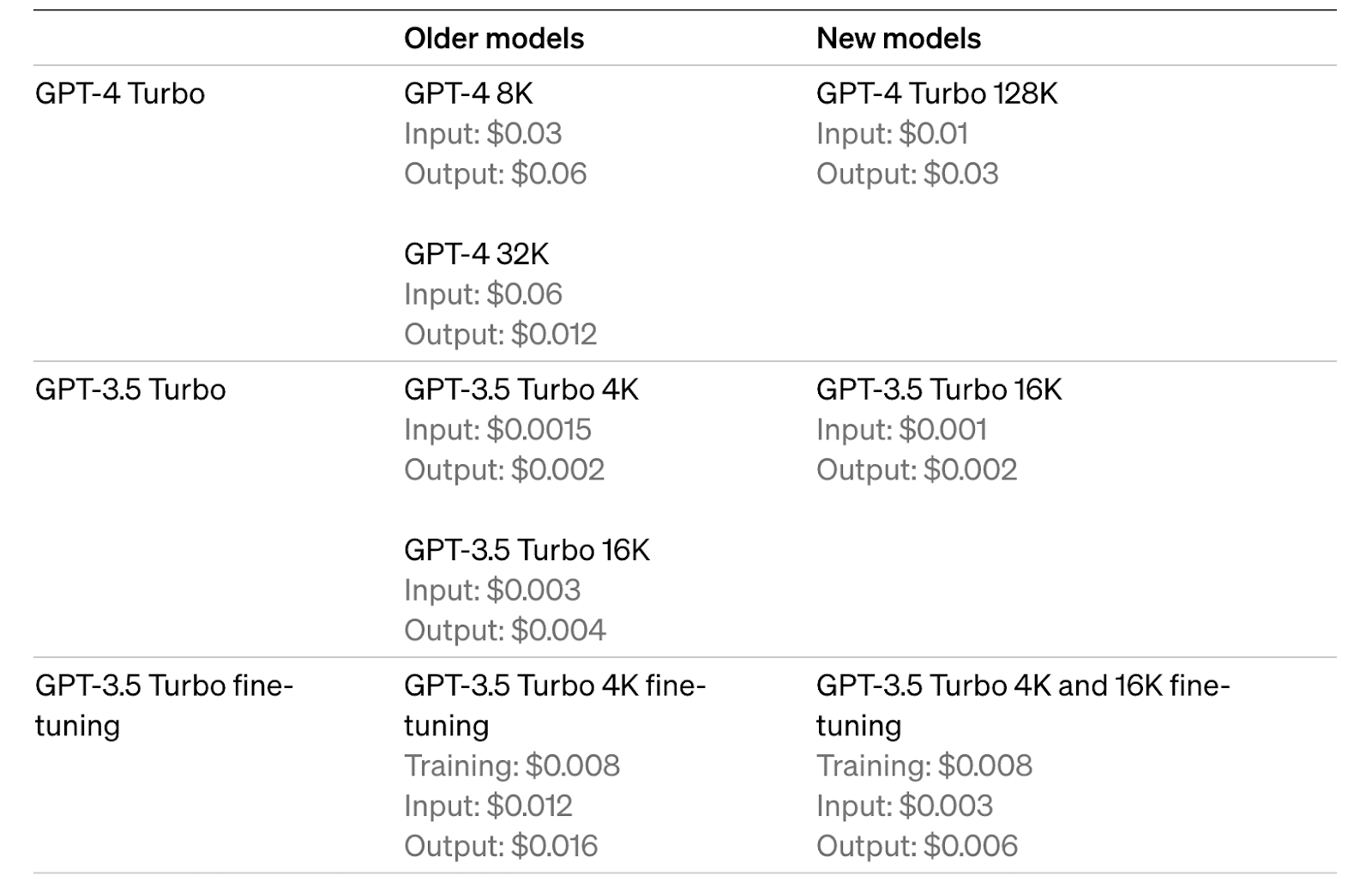

Starting now, it is much cheaper than GPT-4. In particular:

3 times cheaper for input tokens.

2 times cheaper for output tokens.

This is indeed great news for developers of AI agents and apps.
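To make the savings concrete, here is a small sketch of the arithmetic. The per-token prices are the launch-time list prices as we remember them from OpenAI's pricing page (they are not stated in this post), and the workload is made up, so treat the numbers as an illustration only.

```python
# Launch-time list prices in USD per 1,000 tokens. Assumption: taken from
# OpenAI's pricing page at the time, not from this article. The dictionary
# keys are just labels, not API model names.
PRICES_PER_1K = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of a single request."""
    price = PRICES_PER_1K[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


# Hypothetical agent step: 10,000 prompt tokens and 1,000 completion tokens.
gpt4_cost = request_cost("gpt-4", 10_000, 1_000)
turbo_cost = request_cost("gpt-4-turbo", 10_000, 1_000)
print(f"GPT-4: ${gpt4_cost:.2f}, GPT-4 Turbo: ${turbo_cost:.2f}")
```

Because agents tend to send far more input tokens than they receive in output, the 3x input discount usually matters more than the 2x output discount.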

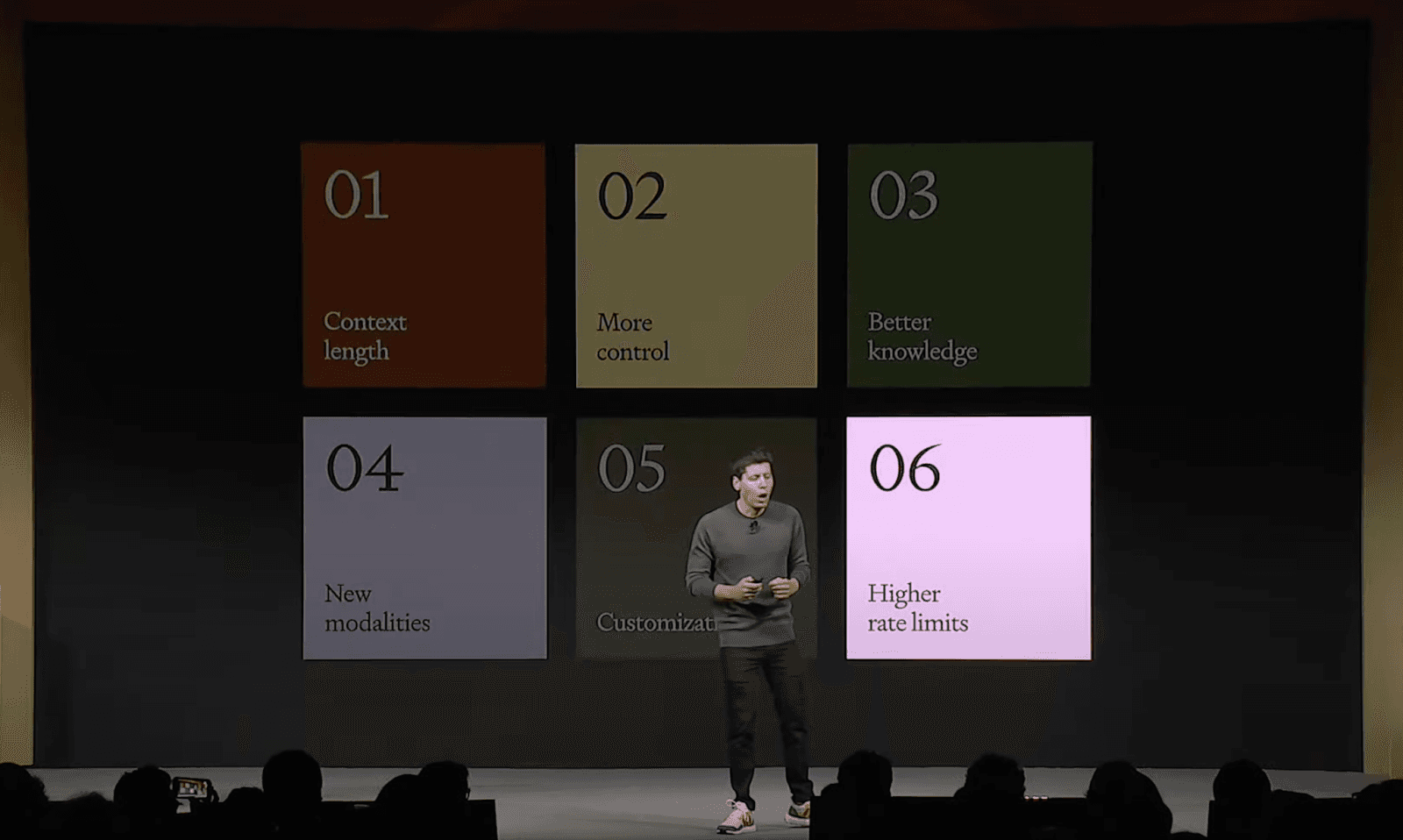

The six major updates in GPT-4 Turbo are:

01. Context length

While GPT-4 supports up to 8k (in some cases up to 32k) context length, GPT-4 Turbo offers 128k context length. That is approximately 300 pages of a standard book, so it could remember what happened to Tolkien’s hobbits throughout the book.

Sam also mentioned that Turbo is more accurate over a long context.
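The "300 pages" figure can be reproduced with a back-of-the-envelope conversion. Both conversion factors below are rules of thumb that we are assuming for illustration (roughly 0.75 English words per token and 320 words per book page); real texts will vary.

```python
# Rule-of-thumb conversion factors. Both are assumptions, not exact figures.
WORDS_PER_TOKEN = 0.75  # common heuristic for English text
WORDS_PER_PAGE = 320    # assumed density of a standard book page


def pages_for_context(context_tokens: int) -> float:
    """Estimate how many book pages fit into a context window."""
    return context_tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


for name, tokens in [
    ("GPT-4 (8k)", 8_192),
    ("GPT-4 (32k)", 32_768),
    ("GPT-4 Turbo (128k)", 128_000),
]:
    print(f"{name}: ~{pages_for_context(tokens):.0f} pages")
```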

02. More control

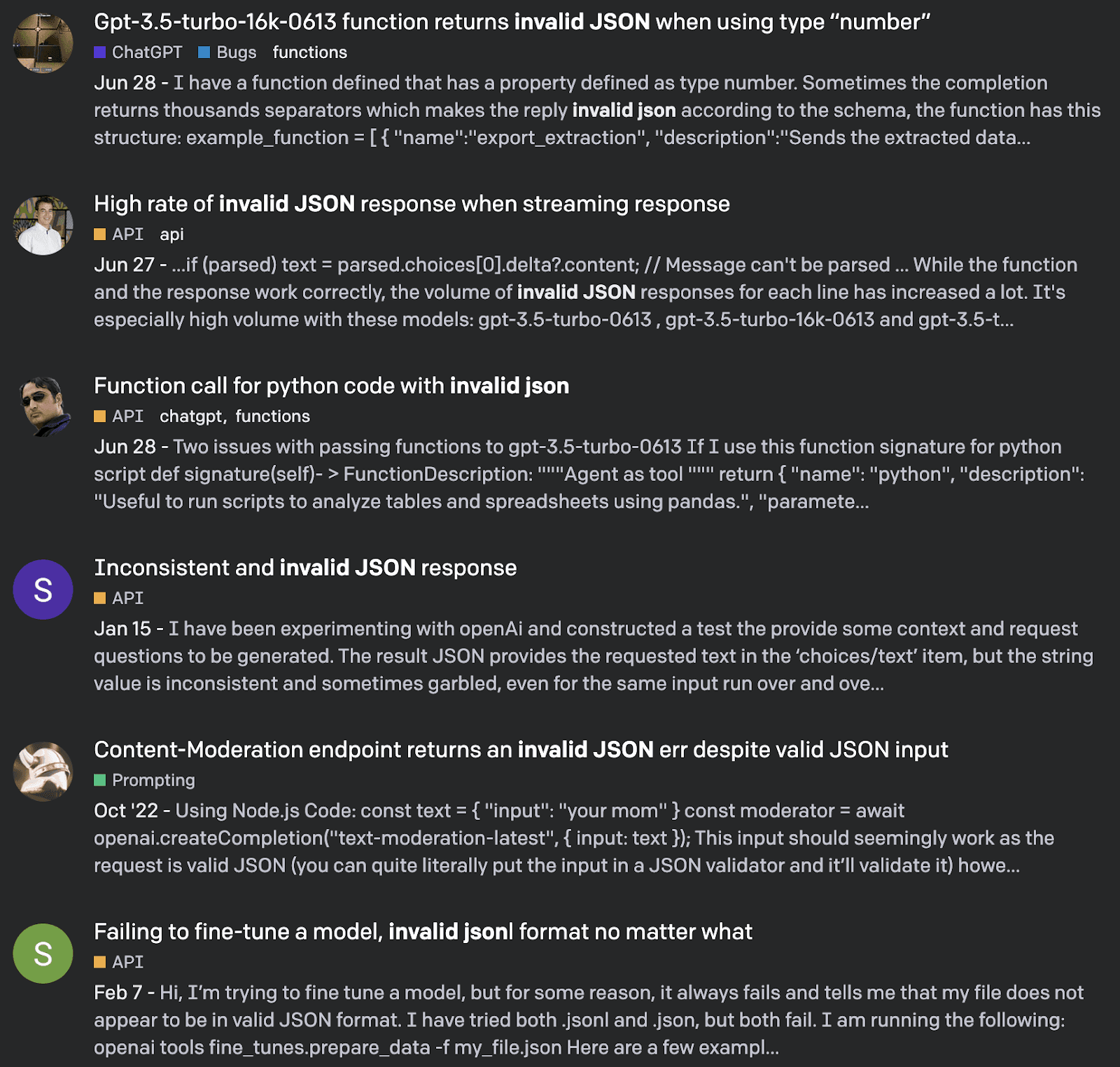

Reliability has been a huge problem for AI developers, given the unpredictable outputs of LLMs.

Addressing feedback from developers, there will be more control over the model's responses and outputs. This could partly compensate for the LLMs' stochasticity.

First, OpenAI announced a “JSON mode” feature, which ensures that the model responds with valid JSON. That will make API calls much easier to work with.

Developers have struggled with invalid JSON output in the past. Source
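As a rough sketch of how the valid-JSON feature is used: in the Chat Completions API it is switched on with the `response_format` parameter. The snippet below only builds the request and parses a made-up reply, so it runs without an API key. The model name is the GPT-4 Turbo preview name at launch, and the helper names are our own.

```python
import json


def build_json_mode_request(user_prompt: str) -> dict:
    """Build keyword arguments for a Chat Completions call with JSON mode on."""
    return {
        "model": "gpt-4-1106-preview",  # GPT-4 Turbo preview name at launch
        "response_format": {"type": "json_object"},
        "messages": [
            # As far as we know, JSON mode expects the word "JSON" to appear
            # somewhere in the messages.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_reply(content: str) -> dict:
    """Parse the reply; a reply cut off by the token limit can still be invalid."""
    try:
        return json.loads(content)
    except json.JSONDecodeError as err:
        raise ValueError(f"model returned invalid JSON: {err}") from err


request = build_json_mode_request("List one hobbit with his age.")
# With the openai Python package (v1+), this would be sent roughly as:
#   client.chat.completions.create(**request)
sample_reply = '{"hobbits": [{"name": "Frodo", "age": 50}]}'  # made-up reply
print(parse_reply(sample_reply)["hobbits"][0]["name"])
```

Even with JSON mode, it is worth keeping the `try/except`: valid JSON does not guarantee that the object has the keys your app expects.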

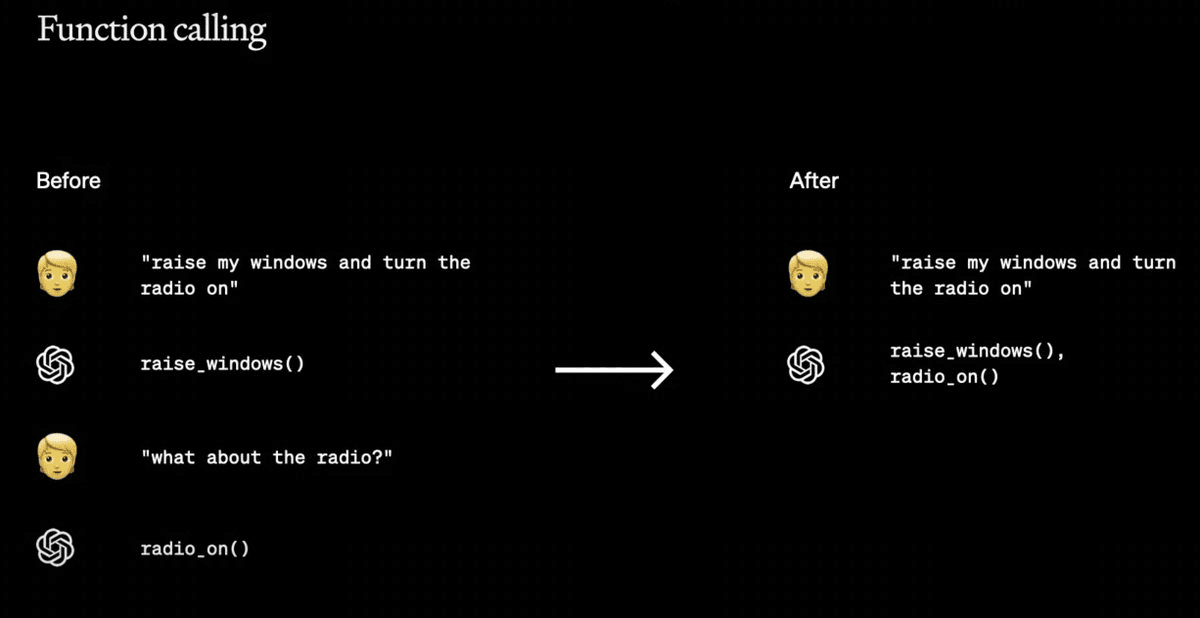

Second, GPT-4 Turbo is significantly better at function calling. The model can now request multiple function calls in a single response.

The model will do better at following instructions in general.
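Here is a minimal sketch of what handling several function calls from one response can look like. The assistant message is simulated (so the example runs offline) but follows the `tool_calls` shape of the Chat Completions API as we understand it. The two local functions are hypothetical stubs.

```python
import json


def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # hypothetical stub, no real lookup


def get_time(city: str) -> str:
    return f"12:00 in {city}"  # hypothetical stub, no real lookup


LOCAL_FUNCTIONS = {"get_weather": get_weather, "get_time": get_time}

# Simulated assistant message that asks for two function calls at once.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Prague"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_time", "arguments": '{"city": "Prague"}'}},
    ],
}


def run_tool_calls(message: dict) -> list:
    """Execute every requested call and build one "tool" message per call."""
    results = []
    for call in message.get("tool_calls") or []:
        function = LOCAL_FUNCTIONS[call["function"]["name"]]
        arguments = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties the result to its request
            "content": function(**arguments),
        })
    return results


tool_messages = run_tool_calls(assistant_message)
for message in tool_messages:
    print(message["tool_call_id"], "->", message["content"])
```

The resulting messages would be appended to the conversation and sent back to the model, which then writes its final answer.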

Finally, Altman announced a beta of reproducible outputs. You can now pass a seed parameter to the model, which makes it return consistent outputs for the same request. That gives developers a higher degree of control over model behavior.
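A small sketch of how the seed fits into a request. The `seed` request parameter and the `system_fingerprint` response field are part of the Chat Completions API as we understand it. The helper names and the fingerprint values below are made up.

```python
def build_seeded_request(prompt: str, seed: int) -> dict:
    """Build keyword arguments for a Chat Completions call with a fixed seed."""
    return {
        "model": "gpt-4-1106-preview",  # GPT-4 Turbo preview name at launch
        "seed": seed,
        "messages": [{"role": "user", "content": prompt}],
    }


def same_backend(response_a: dict, response_b: dict) -> bool:
    """Outputs are only expected to match if the backend configuration matches."""
    return response_a["system_fingerprint"] == response_b["system_fingerprint"]


first = build_seeded_request("Name a hobbit.", seed=42)
second = build_seeded_request("Name a hobbit.", seed=42)
print(first == second)  # identical requests are the precondition

# Made-up response metadata, for illustration only.
response_a = {"system_fingerprint": "fp_example_1"}
response_b = {"system_fingerprint": "fp_example_2"}
print(same_backend(response_a, response_b))
```

The feature is in beta, so we would treat it as "mostly deterministic" rather than a hard guarantee.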

03. Updated knowledge

The knowledge cutoff has been extended to April 2023 and will continue to see improvements.

Additionally, OpenAI is riding the current hype by adding built-in RAG (retrieval-augmented generation). RAG has sparked a lot of interest among developers and has become a major topic at conferences. OpenAI is now introducing a retrieval feature in its platform, which lets developers bring information from external documents or databases into their projects.
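For readers new to RAG, the idea fits in a few lines: find the most relevant document for a question and put it into the prompt. The toy below uses word-count vectors and cosine similarity from the standard library. It is our own illustration, not how OpenAI's retrieval works internally, and real systems typically use embeddings instead.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Turn text into a bag-of-words vector (lowercased word counts)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 1) -> list:
    """Return the k documents most similar to the query."""
    query_vector = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vector, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list) -> str:
    """Augment the question with the retrieved context."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "GPT-4 Turbo supports a 128k context window.",
    "The GPT Store will feature creations by verified builders.",
    "Copyright Shield covers legal costs for copyright claims.",
]
query = "How large is the context window of GPT-4 Turbo?"
print(build_prompt(query, docs))
```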

04. New modalities

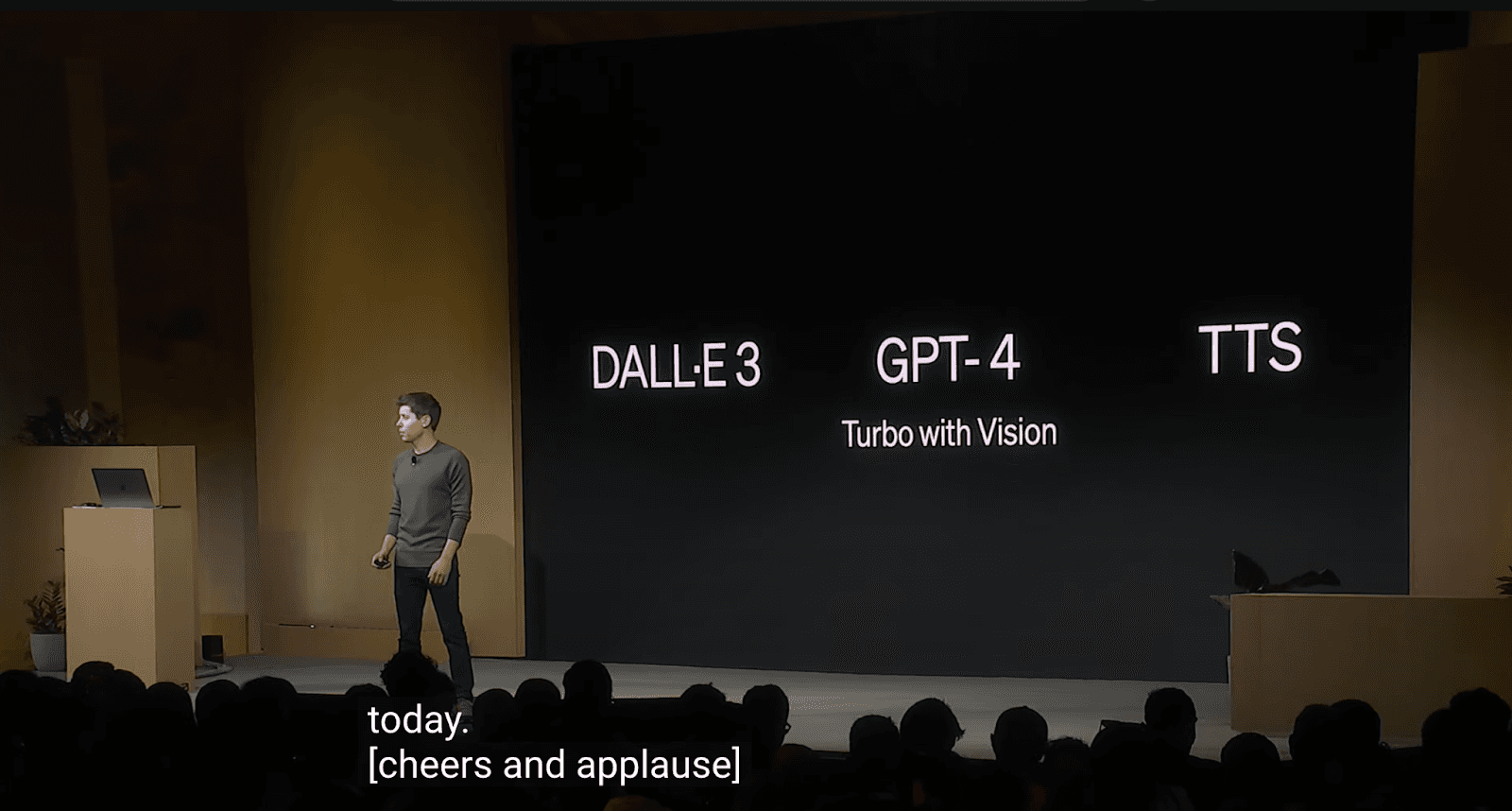

Surprising no one, DALL·E 3, GPT-4 Turbo with Vision, and the new text-to-speech (TTS) model are all going into the OpenAI API now.

Developers can integrate DALL·E 3, which recently launched for ChatGPT Plus and Enterprise users, directly into their apps and products through the Images API by specifying dall-e-3 as the model.
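In practice, "specifying dall-e-3 as the model" means a request along these lines. This sketch only builds the request so that it runs without an API key. The prompt and the helper name are our own, and the size is one of the sizes we believe DALL·E 3 accepts.

```python
def build_image_request(prompt: str) -> dict:
    """Build keyword arguments for an Images API generation call."""
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "size": "1024x1024",
        "n": 1,  # as far as we know, DALL·E 3 generates one image per request
    }


image_request = build_image_request("A hobbit hole with a round green door")
# With the openai Python package (v1+), this would be sent roughly as:
#   client.images.generate(**image_request)
print(image_request["model"])
```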

05. Customization

Fine-tuning has proven to be highly effective for GPT-3.5 since its launch a few months ago. Starting immediately, OpenAI is extending this approach to the 16k version of the model.

OpenAI is inviting active fine-tuning users to apply for experimental access to GPT-4 fine-tuning. It is also launching a Custom Models program.

The Custom Models program should allow close collaboration between OpenAI researchers and companies to create highly customized models for specific use cases. This covers every step of the model training process, from domain-specific pre-training to post-training tailored to a particular domain.

06. Higher rate limits

OpenAI is doubling tokens per minute for GPT-4 customers and allowing rate limit changes in API settings.

They're introducing Copyright Shield to cover legal costs for copyright claims in ChatGPT Enterprise and the API, emphasizing they don't train models using API or ChatGPT Enterprise data.

“And let me be clear,” adds Sam Altman. “This is a good time to remind people, that we do not train on data from the API or ChatGPT Enterprise ever.”

2. GPTs - Customized versions of ChatGPT

OpenAI DevDay was the company's first-ever conference for developers. However, the launch with the biggest hype, GPTs, is consumer-facing.

You may recall that OpenAI indicated in February that it intended to allow users to define their own customizable AI agents. The rumors prior to the DevDay were true - here come the OpenAI customizable GPTs.

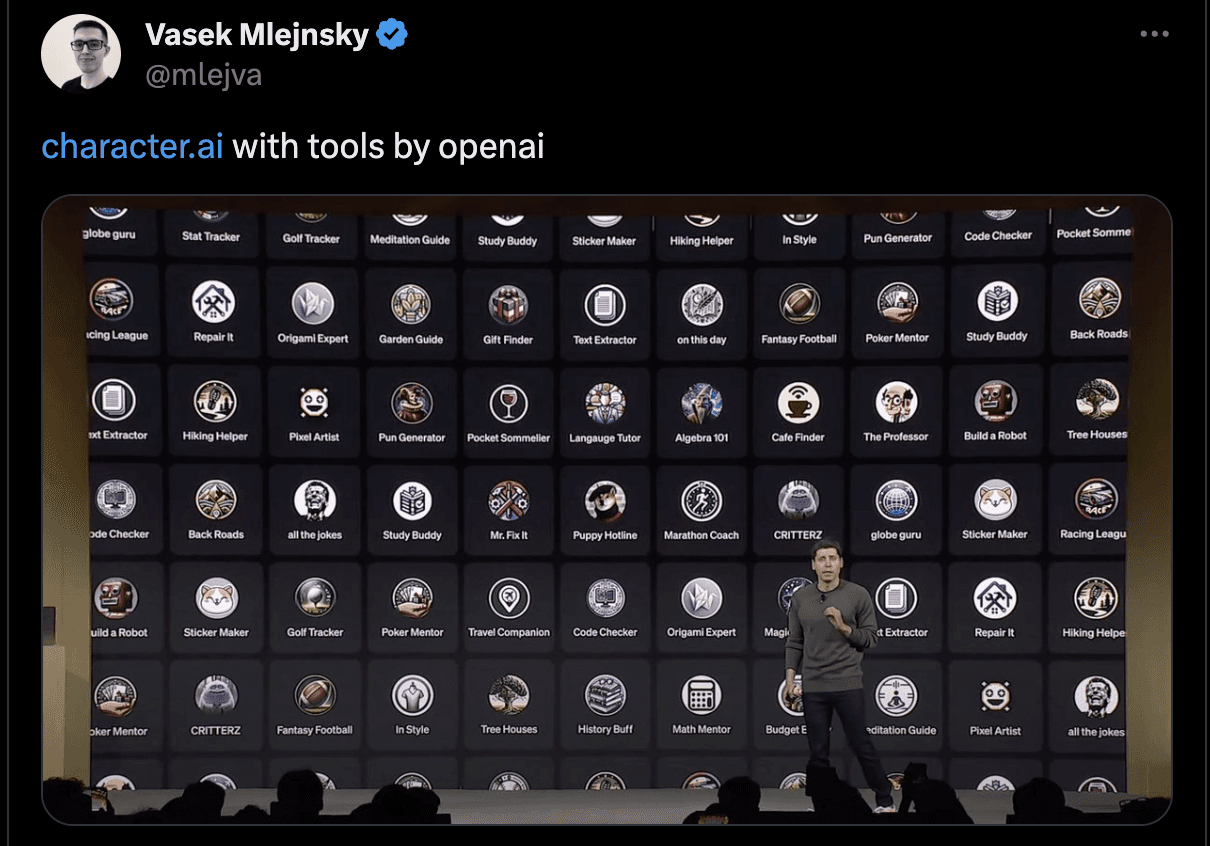

You can now create custom versions of ChatGPT without knowing how to code. GPTs combine instructions, extra knowledge, and any combination of skills.

Example GPTs are available for ChatGPT Plus and Enterprise users, with more users to follow.

OpenAI decided to involve the AI community that is shaping the future. The appeal of customizable chatbots is shown, for example, by the headline “ChatGPT Is More Famous, but Character.AI Wins on Engagement”. (Users of Character.AI - a chatbot interface where you can customize your chat avatars - allegedly spend an average of two hours per day on the site.)

The GPT Store will launch soon, featuring creations by verified builders.

Why “GPTs”?

OpenAI avoided the term “AI agent” and used "GPTs", even though they follow the characteristics of agents.

This may be a way to connect “GPT” with the already publicly accepted “ChatGPT”, since “GPTs” may be more relatable to the broader public. The emphasis is on programming in natural language.

Recall the definition of LLM Powered Autonomous Agents by Lilian Weng from OpenAI, where agents are characterized by:

Long-term memory

Planning

Tool use.

I see some parallels with GPTs, which have:

Expanded Knowledge

Custom Instructions

Actions.

OpenAI is communicating its new product as "GPTs" while still referring to it as "agents". Source

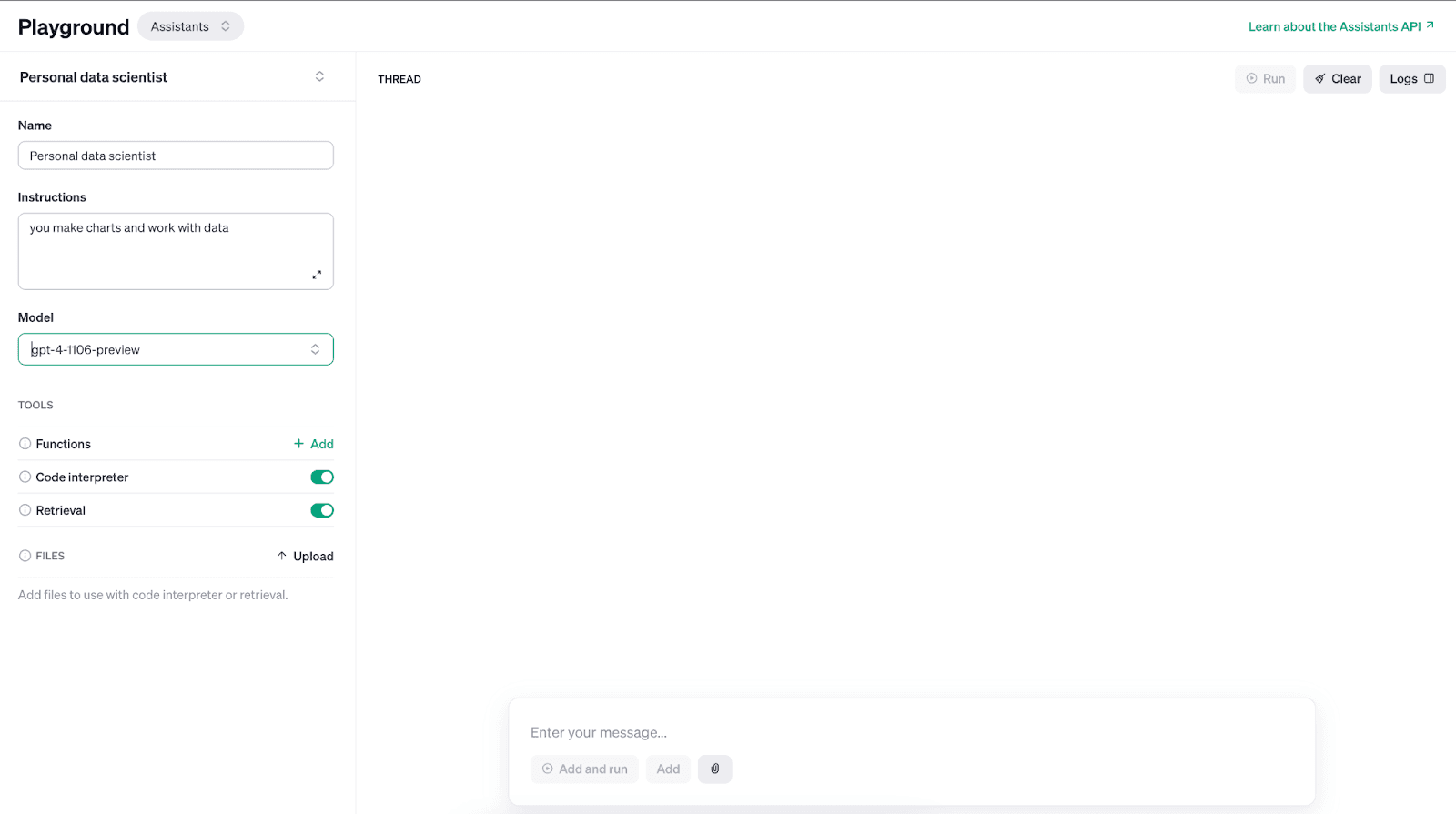

3. Assistants API

The Assistants API is basically the developer-facing part of GPTs. It lets developers build agent-like assistants at the API level.

It simplifies the process for developers to create their own AI-powered assistants with well-defined objectives and the ability to call models and tools. You no longer need to include all previous messages for context when sending a new one to the API.
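The difference is easiest to see in a toy model. With a stateless chat API, the caller resends the whole history on every request. With the Assistants API, the conversation lives in a thread that the platform stores. The `Thread` class below is a hypothetical local stand-in that we wrote for illustration, not the real client, and the "assistant" is a stub function.

```python
from dataclasses import dataclass, field


@dataclass
class Thread:
    """Hypothetical local stand-in for a server-side Assistants API thread."""
    messages: list = field(default_factory=list)

    def add_user_message(self, content: str) -> None:
        # The caller sends only the new message; the thread keeps the history.
        self.messages.append({"role": "user", "content": content})

    def run(self, assistant) -> str:
        # The assistant sees the full stored history on every run.
        reply = assistant(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_assistant(history: list) -> str:
    """Stub model: reports how many user messages it has seen so far."""
    user_turns = [m for m in history if m["role"] == "user"]
    return f"Seen {len(user_turns)} user message(s); latest: {user_turns[-1]['content']}"


thread = Thread()
thread.add_user_message("Summarize DevDay.")
first_reply = thread.run(echo_assistant)
thread.add_user_message("Now shorter.")
second_reply = thread.run(echo_assistant)
print(second_reply)
```

In the real API, the corresponding steps are creating a thread, adding a message to it, and starting a run for a given assistant. We have left those calls out so the sketch runs offline.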

OpenAI also shipped a new playground to build the assistants. What is interesting to me is how OpenAI just targets both no-code "developers" and traditional developers with the Assistants API. I was expecting something more d

The Assistants API includes:

Better function calling

Built-in conversation management

Python sandbox

Memory

It offers using two tools so far:

Retrieval

Code Interpreter (the feature that ChatGPT recently relabeled as "Advanced Data Analysis").

We can probably expect more tools to be added soon, and there is already an option to add your custom tool. The tools are essentially just OpenAI Functions.
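To show what "tools are essentially just OpenAI Functions" means, here is a sketch of an assistant configuration that combines the two built-in tools with one custom function. The tool type names reflect the API at launch as we understand it. The `get_stock_price` function, its schema, and the assistant's name and instructions are hypothetical.

```python
import json

# A custom tool is described by a name, a description, and a JSON Schema
# for its parameters. This particular function is hypothetical.
get_stock_price_tool = {
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Return the latest price for a stock ticker.",
        "parameters": {
            "type": "object",
            "properties": {"ticker": {"type": "string"}},
            "required": ["ticker"],
        },
    },
}

assistant_config = {
    "name": "Market helper",  # made-up assistant
    "instructions": "Answer questions about stocks. Use tools when needed.",
    "model": "gpt-4-1106-preview",  # GPT-4 Turbo preview name at launch
    "tools": [
        {"type": "code_interpreter"},
        {"type": "retrieval"},
        get_stock_price_tool,
    ],
}

# With the openai Python package (v1+), this would be sent roughly as:
#   client.beta.assistants.create(**assistant_config)
print(json.dumps([tool["type"] for tool in assistant_config["tools"]]))
```

The model never executes the custom function itself. It only returns the function name and arguments, and your code runs the function and reports the result back.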

How is the OpenAI Code Interpreter different from E2B Sandboxes?

What are the implications?

OpenAI is shifting from focusing solely on AGI to prioritizing the commoditization of software development and building a platform. This will have a huge impact on coding and prototyping.

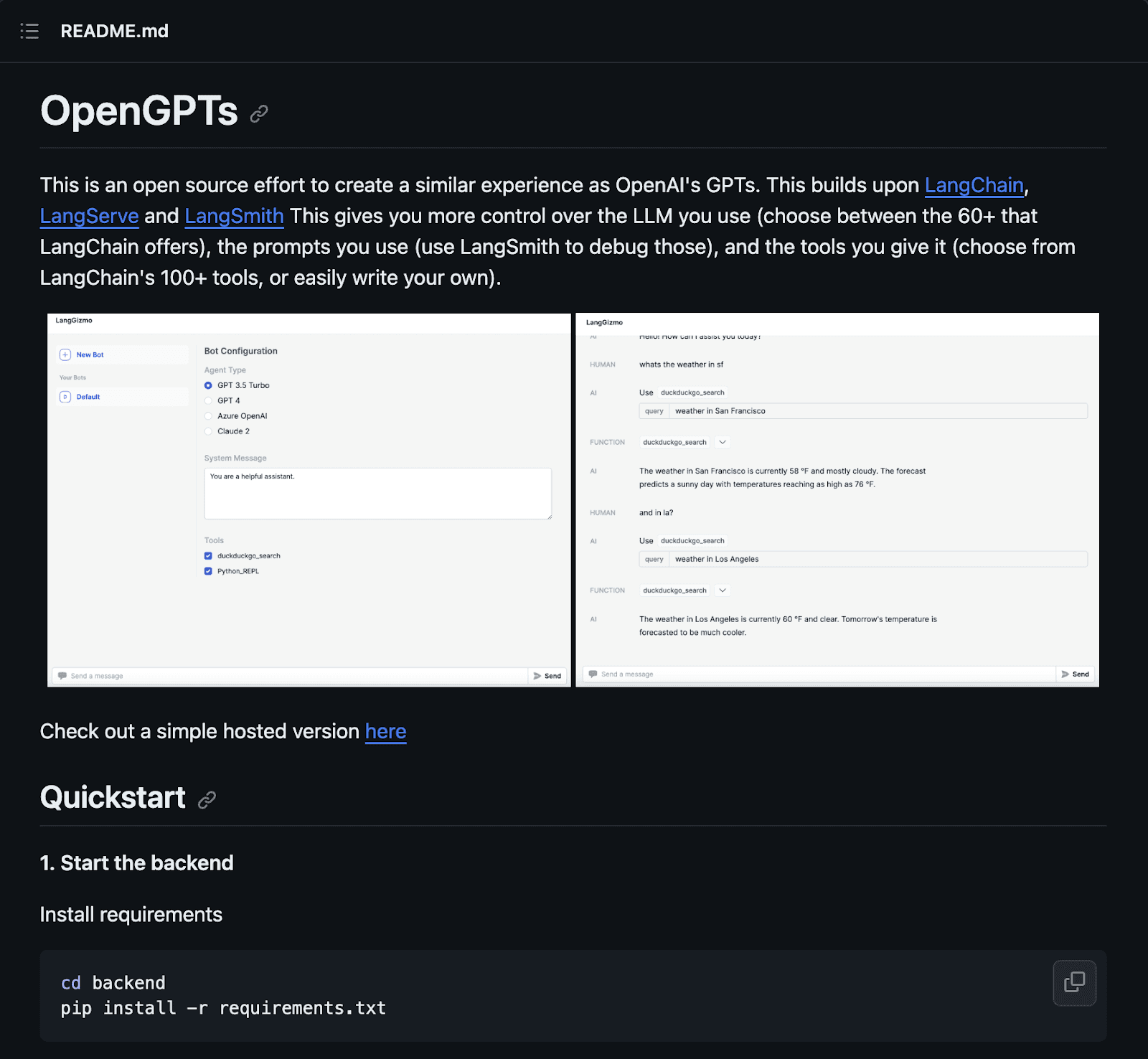

Companies reacted quickly to the updates. LangChain, the most popular framework for building AI agents, quickly announced OpenGPTs as an alternative to OpenAI’s GPTs.

The announcements certainly put many companies in danger. Vector database startups, for example, are threatened by the retrieval tool built into the Assistants API.

Hopefully, most startups and companies won't go out of business. Instead, we'll see even more AI companies building and adding completely new AI features to their products.

For the AI developer community, 2024 will certainly be the production year, and we are excited to see what’s coming.

About OpenAI DevDay

OpenAI’s first developer conference took place in San Francisco, CA, on November 6, 2023.

The goal was to bring hundreds of developers from around the world together with the team at OpenAI to preview new tools and exchange ideas.